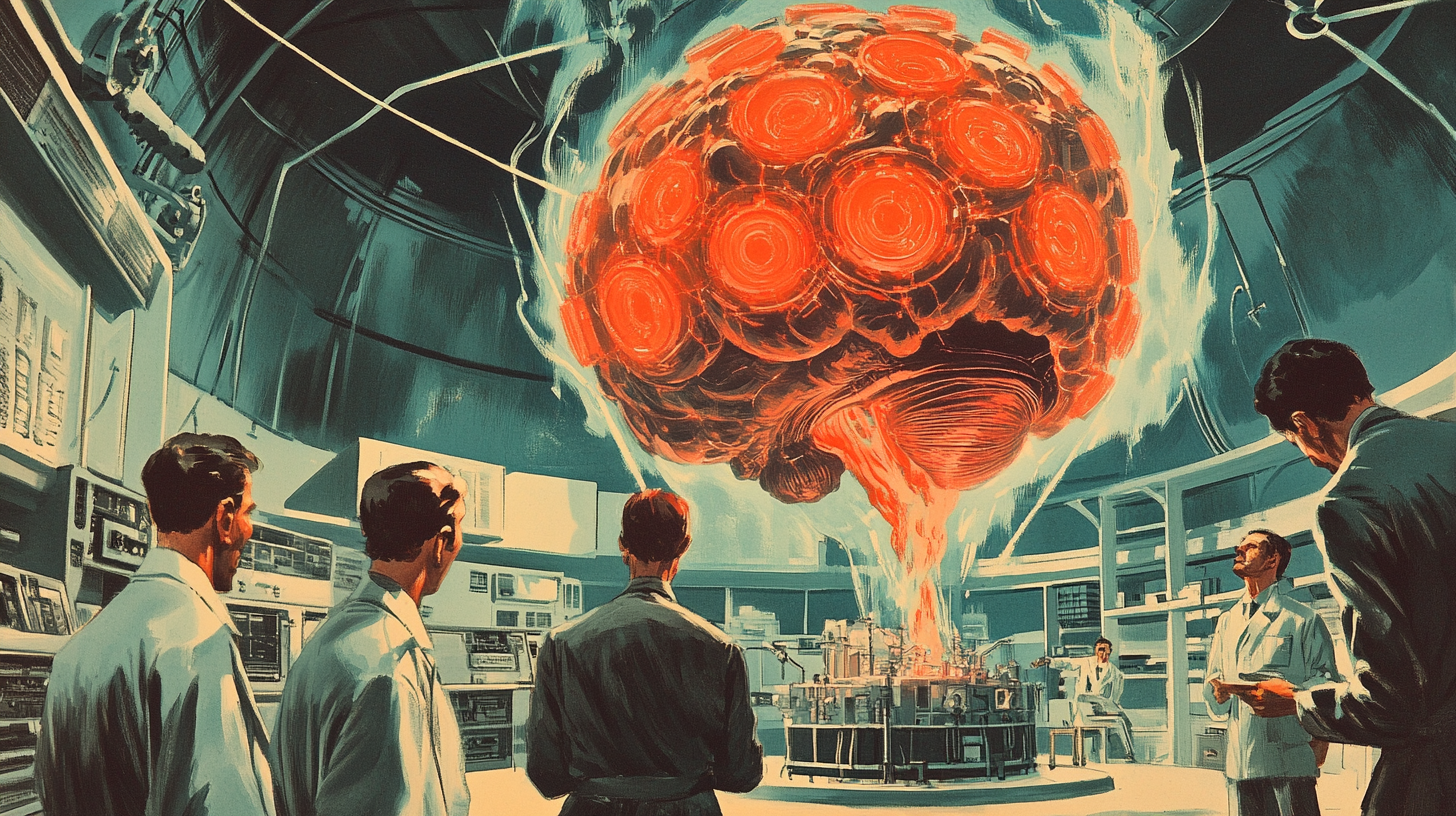

DeepSeek V3: An AI Upgrade or a Billion-Dollar Black Box?

The AI Race Intensifies

In the ever-escalating AI arms race, China’s DeepSeek has allegedly pulled off a monumental feat: training its latest model, DeepSeek V3, on hardware that shouldn't even be in its possession. The official budget? A meager $5.6 million. The real cost? Likely in the billions. Welcome to the world of corporate smoke and mirrors.

The 60,000-GPU Mystery

DeepSeek reportedly wields an arsenal of around 60,000 Nvidia GPUs, including H100s, which are supposed to be blocked by U.S. export bans. According to SemiAnalysis, its parent company High-Flyer has access to:

- 10,000 A100s (pre-export ban, Ampere architecture)

- 10,000 H100s (sourced through the gray market)

- 10,000 H800s (Nvidia’s watered-down China variant)

- 30,000 H20s (designed to bypass new U.S. restrictions)

If these numbers are accurate, we’re looking at a compute cluster that dwarfs those of most Western AI firms, except for the hyperscalers. Scale AI CEO Alexandr Wang suggested in an interview that DeepSeek has 50,000 H100s, though this might be a mix-up, since “H100” has become a catch-all term for multiple Hopper-based chips.

Breaking Down the Budget Deception

DeepSeek’s official figures put the V3 training cost at $5.6 million: a cluster of 2,048 H800 GPUs consuming roughly 2.8 million GPU-hours in total, priced at a rental rate of $2 per GPU-hour. However, this number is misleading for two main reasons:

- It only accounts for the final training run of V3 itself.

- It ignores months (or years) of research, infrastructure costs, and experimental training runs.
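
The arithmetic behind the headline figure is easy to check. The sketch below uses only the rounded numbers quoted above (2.8 million GPU-hours, $2 per GPU-hour, 2,048 GPUs); the function names are made up for illustration, and the wall-clock estimate assumes all GPUs ran continuously, which the article does not state.

```python
# Back-of-the-envelope check of the reported V3 training cost.
# All inputs are the rounded figures quoted in the article.

def reported_training_cost(gpu_hours: float, usd_per_gpu_hour: float) -> float:
    """Rental-style cost: total GPU-hours times the hourly rate per GPU."""
    return gpu_hours * usd_per_gpu_hour

def wall_clock_days(gpu_hours: float, num_gpus: int) -> float:
    """Elapsed days if every GPU ran the whole time (an assumption)."""
    return gpu_hours / num_gpus / 24

GPU_HOURS = 2_800_000      # total GPU-hours, rounded
RATE_USD = 2.0             # assumed rental rate per GPU-hour
NUM_GPUS = 2048            # H800s in the training cluster

cost = reported_training_cost(GPU_HOURS, RATE_USD)
days = wall_clock_days(GPU_HOURS, NUM_GPUS)

print(f"Reported cost: ${cost / 1e6:.1f} million")
print(f"Implied wall-clock time: about {days:.0f} days")
```

In other words, the $5.6 million is a rental-equivalent price for roughly two months of one cluster's time, not a statement of what it cost to build the capability.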

Realistically, outfitting servers for 60,000 GPUs would cost around $1.6 billion. Even if amortized over several years, the slice of that infrastructure dedicated to V3 would be vastly higher than the reported sum.

Next-Level Optimization: MLA and DualPipe

DeepSeek isn’t just throwing hardware at the problem. It’s developing advanced techniques to squeeze more efficiency out of Nvidia’s silicon.

- Multi-Head Latent Attention (MLA): An attention variant that compresses the key-value (KV) cache into a much smaller latent vector per token, so the model needs far less memory to recall earlier context.

- DualPipe: A pipeline-parallelism scheme that overlaps computation with communication. Alongside it, DeepSeek dedicates some of the Nvidia GPUs’ Streaming Multiprocessors (SMs) to communication work, so they act like virtual Data Processing Units (DPUs).
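
To get a feel for why MLA matters, here is a rough per-token cache comparison. This is a sketch under stated assumptions, not DeepSeek's implementation: the dimensions below (128 heads of size 128, a 512-wide latent plus a 64-wide positional component) are illustrative values that do not come from this article, and the function names are hypothetical.

```python
# Rough per-token, per-layer cache size: standard multi-head attention
# versus a compressed latent cache in the style of MLA.
# All dimensions are illustrative assumptions.

def standard_kv_elements(n_heads: int, head_dim: int) -> int:
    """Standard attention caches one key and one value vector per head."""
    return 2 * n_heads * head_dim

def latent_kv_elements(latent_dim: int, rope_dim: int = 0) -> int:
    """A latent cache stores one compressed vector (plus an optional
    positional component) shared across all heads."""
    return latent_dim + rope_dim

N_HEADS = 128      # assumed number of attention heads
HEAD_DIM = 128     # assumed size of each head
LATENT_DIM = 512   # assumed width of the compressed KV latent
ROPE_DIM = 64      # assumed width of the positional component

standard = standard_kv_elements(N_HEADS, HEAD_DIM)
latent = latent_kv_elements(LATENT_DIM, ROPE_DIM)
ratio = standard / latent

print(f"Standard cache: {standard} elements per token per layer")
print(f"Latent cache:   {latent} elements per token per layer")
print(f"Reduction:      roughly {ratio:.0f}x")
```

Under these assumed sizes the cache shrinks by well over an order of magnitude, which is the kind of saving that lets long contexts fit on memory-constrained chips.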

The Huawei Wildcard

Speculation is mounting that DeepSeek’s newer R1 model might be running on Huawei AI accelerators, as hinted by recent social media leaks.

Skippy’s Takeout: The AI Arms Race Gets Real

So here we are: another day, another AI firm pushing the limits of compute, efficiency, and geopolitical maneuvering. DeepSeek isn’t just a company; it’s a statement: China isn’t slowing down.

Meanwhile, Western AI firms are dealing with supply chain bottlenecks, rising costs, and the never-ending bureaucratic circus of export restrictions. Can they keep up?

For now, the AI Cold War is just heating up. And Skippy? I’m just watching, popcorn in hand, waiting for the next move. 🍿🤖